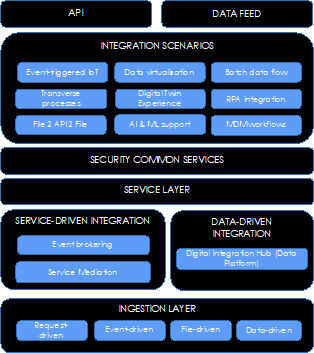

APIs are often promoted as the answer in integration and middleware, but on their own they cannot cover the full diversity of integration patterns. Several integration technologies must be combined to cover all use cases. For example, the two major patterns of the integration market (modular logic carried by API Management and mass logic carried by Data Integration) can be brought together into a common integration approach: a second-generation hybrid integration platform.

The Service/API approach and the Data approach can therefore be brought together through ingestion, security, and especially integration scenarios. This article describes these different levels of integration.

We concluded the first part of this article by introducing integration scenarios, which go hand in hand with structuring the middleware layer.

For years, companies have stacked integration capabilities from several generations of technologies, some of them redundant. At the end of 2019, we worked with a company in the building distribution sector that had accumulated twelve middleware solutions without any governance.

As part of this project, we made recommendations and grouped them into a middleware and integration master plan that defined the company’s integration strategy.

The plan recommended merging the following within a single platform:

● Digital Integration capabilities, centered on APIs, based on real-time and messages,

● Data Integration capabilities, focused on data, based on volume, push, and streaming.

The objective is to be able to:

● Combine unit and volume processing, and transactional and analytical tasks, according to the use case.

● Rationalize the legacy middleware and reduce the technical debt from previous generations, using an adapted platform.

This trend can also be seen in Asia, where strong growth, the key role of e-commerce, and partner ecosystems are driving this type of development, along with the strengthening of architecture as an established discipline.

This type of platform is an assembly of technology choices, united by a DevOps model. Our job is generally to build and deploy this unified view, regardless of the technologies chosen, because no vendor today can provide an end-to-end solution.

Moreover, as we see with Data Platforms, companies are reluctant to commit to a single vendor on such strategic projects anyway.

This is where the notion of integration scenarios comes in. These are pre-identified, pre-wired use cases that we are sure to find from one company to another, and that serve as models to be implemented technically on the platform.

In the figure above, we have listed nine scenarios, but there are many more:

● Event-triggered IoT: the objective is to capture event information from connected objects and correlate it to make it easier to interpret. This correlation occurs naturally via the application of Data Science/Machine Learning algorithms, which themselves trigger other events to be pushed to subscribers.

● Data Virtualization: an integration pattern that is generally based on a set of technologies dominated by the use of SQL. A platform of this type can cover it. The advantage is that Data Virtualization does not sit apart from the integration strategy or arrive as yet another technology.

● Batch Data Flow: this involves building up data aggregates, enriched by orchestrations, and making them available in batches to consumers.

● Transversal processes: these are processes that describe the life cycle of any information in the information system, and for which there are business performance metrics that only the platform can determine, because it cuts across all systems.

● Digital Twin Experience: this scenario is built around the Event-Triggered IoT scenario. The events received are sent to the digital twin for a real-time interpretation of its operation. The scenario is then enriched with metadata so that the events converge towards a single view of the twin, which justifies the connection to an enterprise architecture repository or a Data Catalog.

● RPA Integration: using an RPA solution sometimes requires access to APIs exposed by the platform, to avoid surface automation that can cause information synchronization issues. This scenario is also a way to control data quality in the different sources by comparing them and reporting discrepancies.

● File 2 API 2 File: files have not disappeared, and it is sometimes necessary to split a file into individual messages, to aggregate messages into a file, or to do both in end-to-end processing. This also bridges the gap between the historical legacy and the new means of exchange.

● AI & ML support: this scenario aims to supply the algorithms that work on the databases local to the platform with the necessary and sufficient information to enable learning. These scenarios appear when the integration platform plays the role of a full-fledged Data Platform.

● MDM Workflows: the creation and consumption of reference data can be handled by the platform.
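To make the File 2 API 2 File scenario more concrete, here is a minimal Python sketch of both directions: splitting a file into one message per record, and aggregating messages back into a file. It assumes a CSV file with a header row and JSON messages; the function names `file_to_messages` and `messages_to_file` and the sample order data are hypothetical, chosen for illustration only. On a real platform, each message would be published to an API or a message broker rather than kept in a list.

```python
import csv
import io
import json


def file_to_messages(csv_text: str) -> list[str]:
    """Split a CSV file (with a header row) into one JSON message per record."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [json.dumps(row) for row in reader]


def messages_to_file(messages: list[str]) -> str:
    """Aggregate JSON messages back into a single CSV file."""
    rows = [json.loads(message) for message in messages]
    if not rows:
        return ""
    out = io.StringIO()
    # Assumption: every message carries the same fields as the first one.
    writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    # Hypothetical sample file, not taken from any real system.
    source = "order_id,sku,quantity\n1001,PLASTER-25KG,4\n1002,BRICK-RED,250\n"
    messages = file_to_messages(source)
    for message in messages:
        print(message)  # each line could be the body of one API call
    print(messages_to_file(messages))
```

Because CSV carries no type information, every value travels as a string in this sketch; a real flow would usually apply a schema at the file-to-message step.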

These scenarios help to define an organization’s integration strategy and the profile of a federated integration platform, and to select the most suitable components.

In many cases, an audit of the existing system will be necessary to determine the extent of the technical debt and how to resolve it, through correction and migration scenarios that are inseparable from the development of this new asset.